On May 13, after a period of hype and controversy, Manus announced that it was lifting invitation-code restrictions and fully opening registration, marking the official start of the second phase of the AI agent craze that began in March 2025.

The viral model Manus put to the test: a tale of ice and fire

According to Manus's official announcement:

1. All users can register directly, with no waitlist.
2. Each user can run one free task per day (worth 300 points).
3. All registered users receive a one-time bonus of 1,000 points.

Behind Manus's open registration strategy lies clear business logic.

Previously, the scarcity Manus created through invitation codes stemmed both from objective constraints such as limited server capacity and from the classic Internet-era playbook of using scarcity to trigger a "traffic explosion." This strategy helped push its valuation to US$500 million and secured a US$75 million financing round led by the Silicon Valley venture capital firm Benchmark.

However, a scarcity model can hardly sustain large-scale growth. The combination of one free basic task per day (300 points) and three paid subscription plans (US$19 to US$199 per month) marks its official shift to a mature business model of "user tiering + value-added services."

After registration opened, user feedback showed a sharp split. In hands-on tests, Manus displayed impressive "digital employee" traits: from automatically generating 19 documents for a Texas church construction plan to independently setting up a Python virtual environment, the division of labor among the agents in its multi-agent architecture (planning, execution, verification) does achieve a "human-like workflow." Some developers have observed that Manus's ability to correct its own errors over 40 minutes of continuous work already exceeds the time limits of tools such as Cursor.

But the complaints on the other side are just as sharp. Frequent errors in generated code, a high rate of manual intervention on complex tasks, and an English-only interface with insufficient localization expose its technological immaturity. When users compare it with the multimodal capabilities of Gemini 2.0 in particular, Manus's weakness in adapting to dynamic environments becomes even more obvious. The gap stems from the market's high expectations for its "universal" positioning: despite excellent GAIA benchmark scores, long-tail problems in real scenarios still rely on preset scripts, which falls short of the "autonomous planning" it promotes.

From a macro perspective, the real challenge Manus faces is the strategic squeeze from global AI giants. OpenAI's upcoming "PhD-level agents" and Google Gemini 2.0's multimodal toolchain are building the infrastructure for agent development.

In contrast, Manus's differentiation path lies in "vertical scenario penetration + workflow encapsulation": from resume screening to stock analysis, it integrates toolchains such as browsers and code editors into an "AI pipeline covering 200+ scenarios." Although this "toolset-based innovation" lacks the aura of foundational technology breakthroughs, it is closer to enterprises' need to cut costs and boost efficiency.

An era of computing-power hunger: efficient models give rise to the "money printing machine" myth

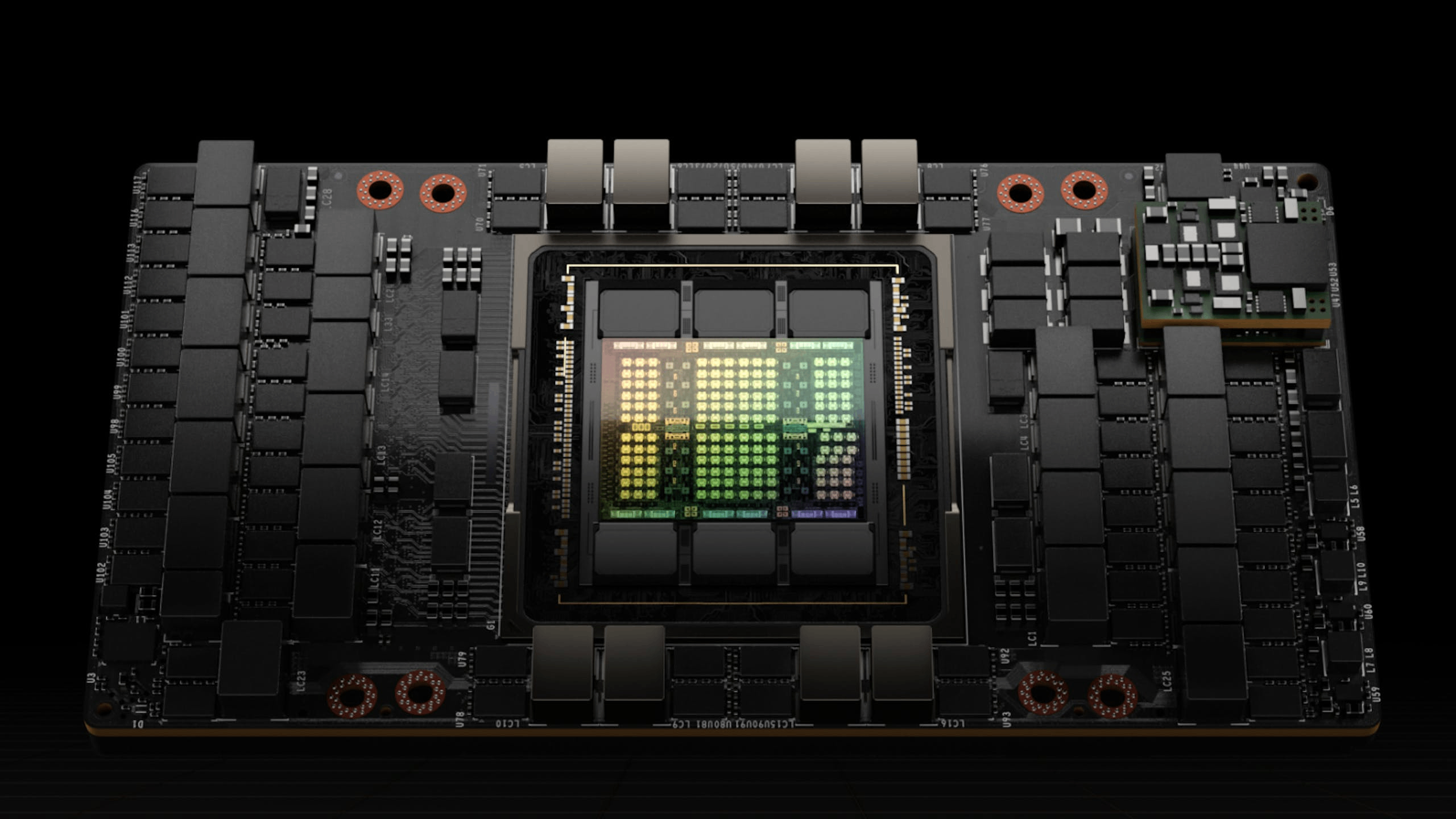

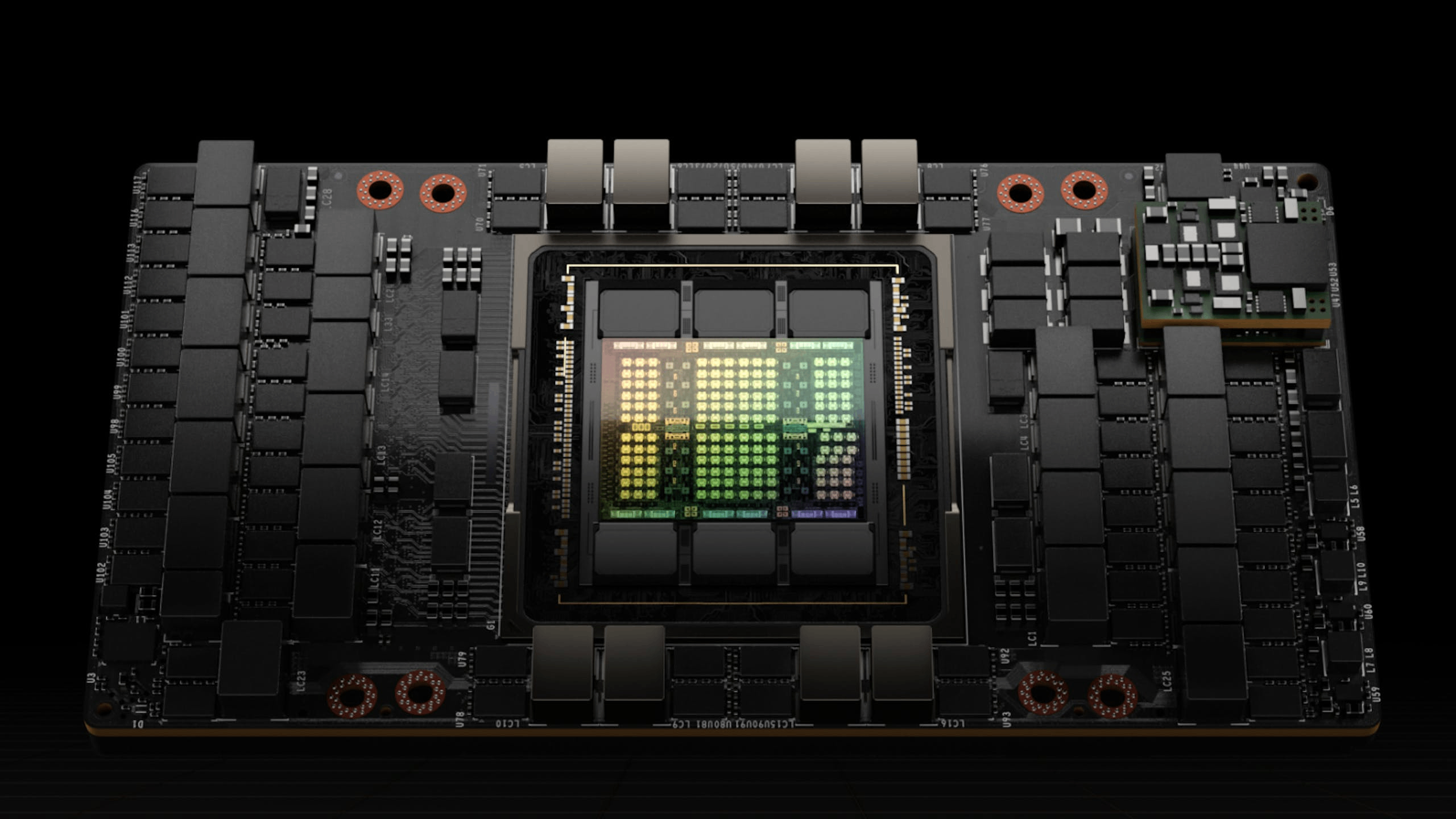

As global technology giants race to launch AI models with more than a trillion parameters, a seemingly contradictory business logic is emerging in the computing power market: improvements in model efficiency do not weaken demand for computing power but instead push the entire industry into a deeper state of "computing power hunger." Behind this counter-intuitive phenomenon lies a deep coupling between the evolution of AI technology and the landscape of the semiconductor industry.

From the perspective of technological iteration, large-model research and development is caught in a double spiral of "parameter competition" and "efficiency optimization." Although improvements in model architecture and algorithms have indeed made individual computing tasks more efficient, the industry's relentless pursuit of model performance has driven parameter counts to grow roughly tenfold per year. OpenAI research shows that the compute required to train leading AI models doubles every 3 to 4 months, far outpacing the chip performance curve described by Moore's Law.
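The gap between those two curves is easiest to see as compound growth. The minimal sketch below converts a doubling period into a one-year growth factor. It assumes, for illustration only, that Moore's Law corresponds to a doubling roughly every 24 months and that chip performance tracks it, which is a simplification.

```python
def annual_growth(doubling_months: float) -> float:
    """Growth factor over 12 months for a quantity that doubles every `doubling_months` months."""
    return 2 ** (12 / doubling_months)


# Assumption: Moore's Law modeled as a doubling roughly every 24 months.
MOORE_DOUBLING_MONTHS = 24

moore = annual_growth(MOORE_DOUBLING_MONTHS)
for months in (3, 4):  # the 3-4 month doubling range cited for training compute
    demand = annual_growth(months)
    print(
        f"doubling every {months} months: {demand:.1f}x per year, "
        f"about {demand / moore:.1f}x faster than Moore's Law ({moore:.2f}x per year)"
    )
```

A 3-to-4-month doubling compounds to 8x to 16x per year, which brackets the roughly tenfold annual growth in parameter counts, while a 24-month doubling gives only about 1.41x per year.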

More importantly, once model parameters exceed the 100-billion threshold, achieving "emergent intelligence" requires the amount of training data to grow exponentially as well. GPT-4's training data has reached the terabyte scale, and the unstructured data such as images and video that next-generation multimodal models must process will push data throughput requirements to a new level. This phenomenon, in which "efficiency dividends" are swallowed up by ever-larger models, is an AI version of what the semiconductor field calls "Andy and Bill's Law": whatever performance gains technology delivers are eventually consumed by more demanding computing needs.

Structural changes in market demand are reshaping the underlying logic of the computing power industry. The balance between traditional general-purpose computing power and intelligent (AI) computing power has undergone a historic reversal: data from the China Academy of Information and Communications Technology (CAICT) show that China's intelligent computing power surpassed its general-purpose computing power in 2022 and is expected to account for more than 65% of the overall computing power mix by 2026.

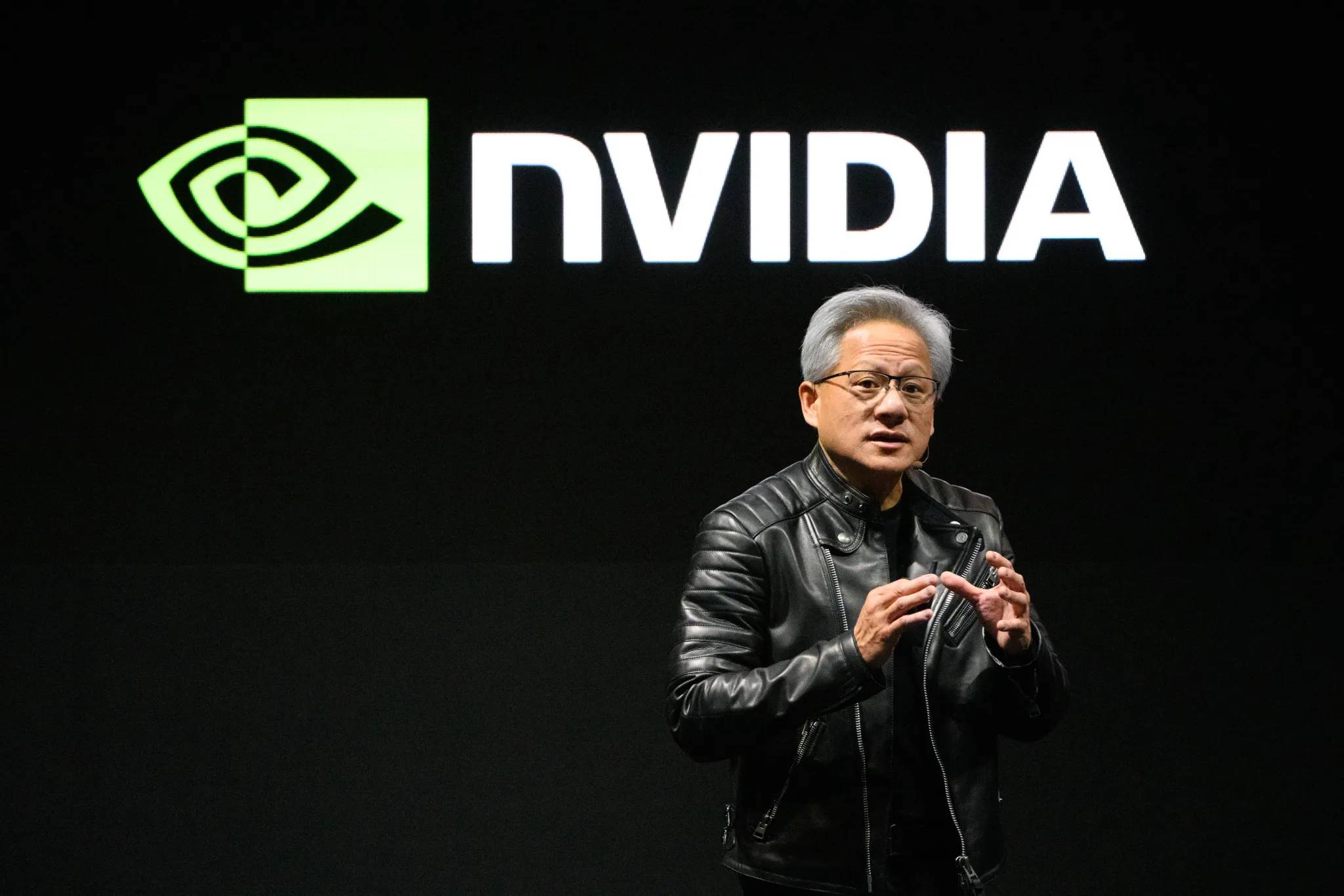

This shift is directly reflected in hardware purchase lists. A single DGX server equipped with eight H100 GPUs sells for more than US$250,000, yet global technology companies are still scrambling to buy them. A single Microsoft order to support its Azure AI services covers tens of thousands of Nvidia accelerator cards, and the second phase of Tesla's Dojo supercomputer project targets 100 exaflops of computing power, equivalent to about 300,000 A100 GPUs.
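The Dojo comparison can be sanity-checked with peak-throughput arithmetic. This minimal sketch assumes the A100's published peak dense BF16/FP16 Tensor Core figure of 312 TFLOPS (without structured sparsity); the text does not say which precision or metric Tesla used, so the result is only an order-of-magnitude check.

```python
# Assumption: A100 peak dense BF16/FP16 Tensor Core throughput of 312 TFLOPS.
# Tesla's exact precision/metric for the 100-exaflop target is not stated.
A100_PEAK_TFLOPS = 312
DOJO_TARGET_EXAFLOPS = 100
GPUS_PER_DGX_SERVER = 8  # a DGX A100 server holds eight A100 GPUs


def a100_equivalents(exaflops: float, per_gpu_tflops: float = A100_PEAK_TFLOPS) -> float:
    """Number of A100 GPUs whose combined peak throughput matches `exaflops`."""
    return exaflops * 1e18 / (per_gpu_tflops * 1e12)


n_gpus = a100_equivalents(DOJO_TARGET_EXAFLOPS)
print(f"{DOJO_TARGET_EXAFLOPS} EFLOPS ~ {n_gpus:,.0f} A100 GPUs")
print(f"~ {n_gpus / GPUS_PER_DGX_SERVER:,.0f} eight-GPU servers")
```

At 312 TFLOPS per GPU, 100 exaflops works out to roughly 320,000 A100 GPUs, close to the 300,000 figure when counted per GPU; counted in eight-GPU servers it would be about 40,000.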

Notably, the computing demand brought by the industrialization of large models has spread from training to inference. IDC predicts that by 2026, AI inference workloads will account for 60% of global data center computing power consumption. In other words, demand does not end when a model finishes training: ongoing inference will continue to consume computing resources equivalent to 30% of those used in the training phase.

In an era of computing-power hunger, how can investors capture the dividends of the times?

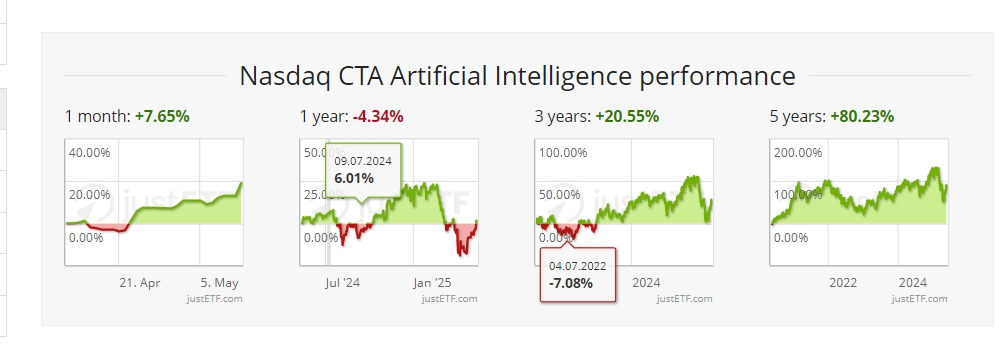

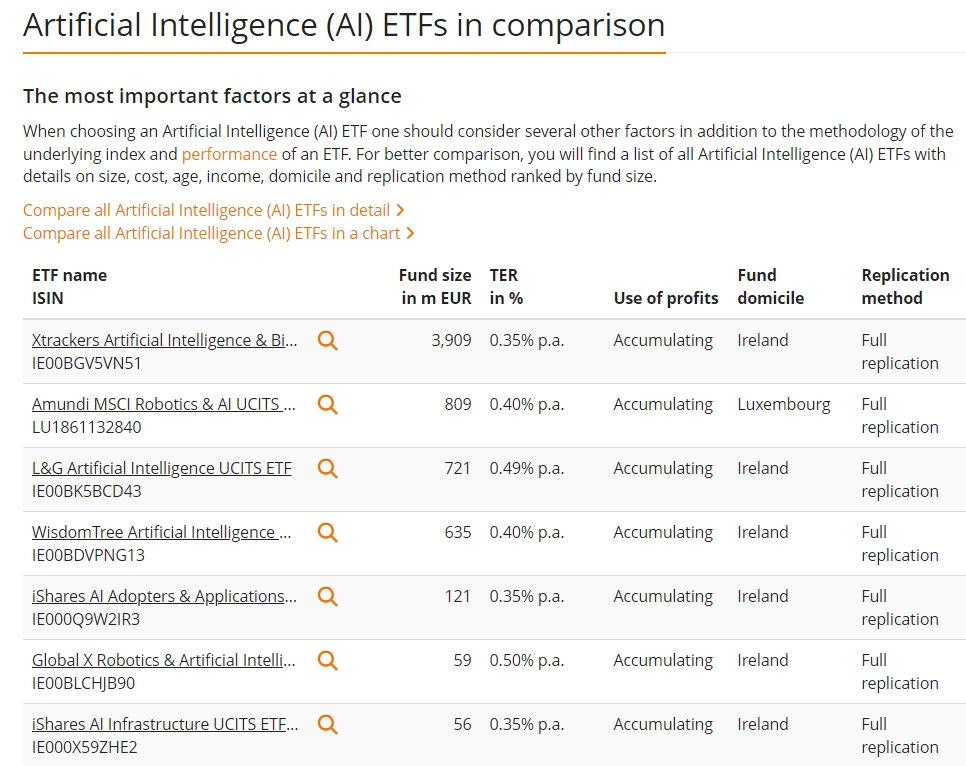

Stock prices of AI-related companies such as NVIDIA, Oracle, Google, Microsoft, and Meta are generally high, so holding several of them requires substantial capital from ordinary investors. AI-related ETFs, by contrast, have a low entry barrier: buying one lot (100 units) generally costs only a little over 100 US dollars.

ETFs come in a wide range of products covering upstream and downstream companies across the AI industry chain, so investors can diversify risk and share in the industry's growth without researching individual stocks in depth. In addition, ETFs carry far lower risk of trading suspension or delisting than individual stocks and can trade normally even in a bear market, giving investors a chance to cut losses. With their low entry threshold, transparent trading, wide selection, high stability, and exchange trading, ETFs have become an ideal choice for ordinary and novice investors looking to participate in the AI market.

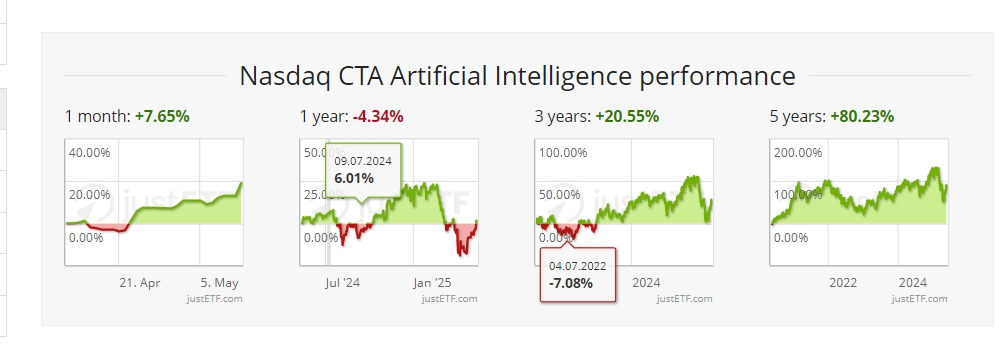

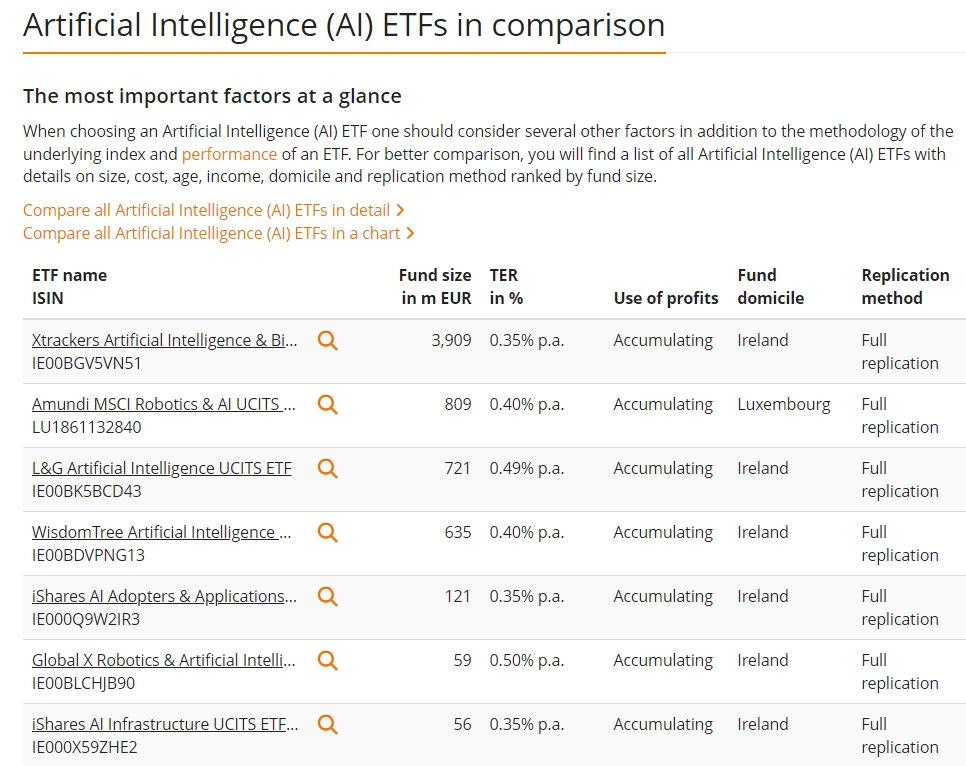

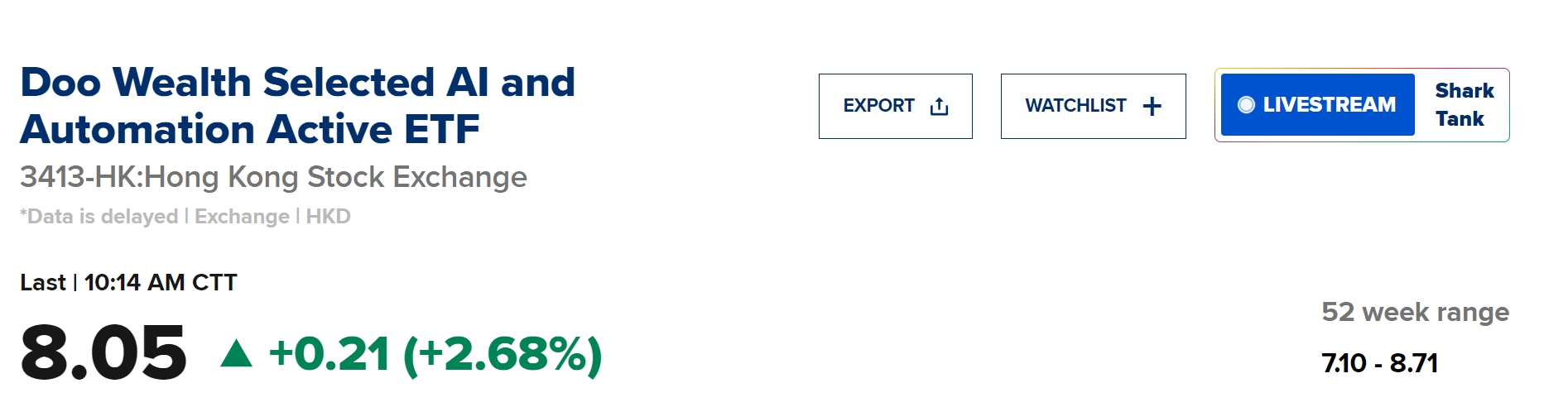

The following are some popular AI ETF products on the market, listed as examples only and not as recommendations:

Venture capitalist Tomasz Tunguz said investors and large technology companies are betting that demand for AI models could grow a trillionfold or more over the next decade, driven by the rapid spread of reasoning models and AI more broadly.

Wishing you all smooth investing!